Circuit Breaker

Intent

Handle costly remote service calls in such a way that the failure of a single service/component cannot bring the whole application down, and we can reconnect to the service as soon as possible.

Explanation

Real world example

Imagine a web application that uses both local files/images and remote services to fetch data. The remote services may be healthy and responsive at times, and slow or unresponsive at others, for a variety of reasons. If one of the remote services is slow or not responding, our application keeps trying to fetch a response from it using multiple threads/processes. Soon all of them hang (a situation known as thread starvation), causing the entire web application to crash. We should be able to detect this situation and show the user an appropriate message so that they can explore the other parts of the app that are unaffected by the remote service failure. Meanwhile, the services that are working normally should keep functioning, unaffected by this failure.

In plain words

Circuit Breaker allows graceful handling of failed remote services. It’s especially useful when all parts of our application are highly decoupled from each other, and failure of one component doesn’t mean the other parts will stop working.

Wikipedia says

Circuit breaker is a design pattern used in modern software development. It is used to detect failures and encapsulates the logic of preventing a failure from constantly recurring, during maintenance, temporary external system failure or unexpected system difficulties.

Programmatic Example

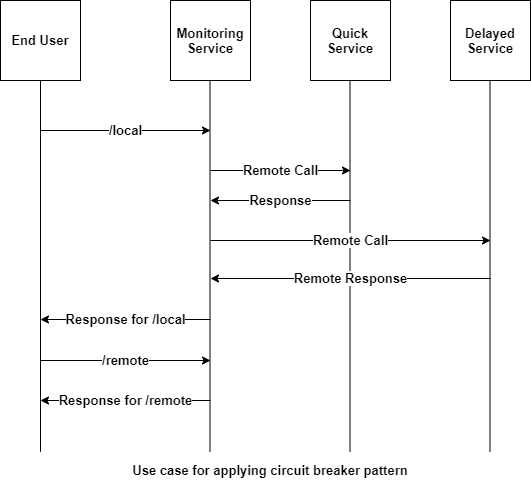

So, how does this all come together? With the above example in mind we will imitate the functionality in a simple example. A monitoring service mimics the web app and makes both local and remote calls.

The service architecture is as follows:

In terms of code, the end user application is:

```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class App {

  private static final Logger LOGGER = LoggerFactory.getLogger(App.class);

  /**
   * Program entry point.
   *
   * @param args command line args
   */
  public static void main(String[] args) {

    var serverStartTime = System.nanoTime();

    // Breaker arguments: timeout, failureThreshold, retryTimePeriod (2 seconds in nanoseconds)
    var delayedService = new DelayedRemoteService(serverStartTime, 5);
    var delayedServiceCircuitBreaker = new DefaultCircuitBreaker(delayedService, 3000, 2,
        2000 * 1000 * 1000);

    var quickService = new QuickRemoteService();
    var quickServiceCircuitBreaker = new DefaultCircuitBreaker(quickService, 3000, 2,
        2000 * 1000 * 1000);

    // Create an object of monitoring service which makes both local and remote calls
    var monitoringService = new MonitoringService(delayedServiceCircuitBreaker,
        quickServiceCircuitBreaker);

    // Fetch response from local resource
    LOGGER.info(monitoringService.localResourceResponse());

    // Fetch response from delayed service 2 times, to meet the failure threshold
    LOGGER.info(monitoringService.delayedServiceResponse());
    LOGGER.info(monitoringService.delayedServiceResponse());

    // Fetch current state of delayed service circuit breaker after crossing the failure
    // threshold limit, which is OPEN now
    LOGGER.info(delayedServiceCircuitBreaker.getState());

    // Meanwhile, the delayed service is down, fetch response from the healthy quick service
    LOGGER.info(monitoringService.quickServiceResponse());
    LOGGER.info(quickServiceCircuitBreaker.getState());

    // Wait for the delayed service to become responsive
    try {
      LOGGER.info("Waiting for delayed service to become responsive");
      Thread.sleep(5000);
    } catch (InterruptedException e) {
      // Restore the interrupt flag instead of swallowing the interruption
      Thread.currentThread().interrupt();
      LOGGER.error("Interrupted while waiting for delayed service", e);
    }
    // Check the state of delayed circuit breaker, should be HALF_OPEN
    LOGGER.info(delayedServiceCircuitBreaker.getState());

    // Fetch response from delayed service, which should be healthy by now
    LOGGER.info(monitoringService.delayedServiceResponse());
    // As successful response is fetched, it should be CLOSED again.
    LOGGER.info(delayedServiceCircuitBreaker.getState());
  }
}
```
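The remote services used by `App` are not shown in this section. The following is only a sketch of what they might look like, assuming that the second argument of `DelayedRemoteService` is a startup delay in seconds and that `RemoteService` exposes a single `call()` method (as used later by the circuit breaker). The method bodies and the response strings are assumptions made for illustration.

```java
// Hypothetical sketch: the type names come from the example, the bodies are assumed.
class RemoteServiceException extends Exception {
  RemoteServiceException(String message) {
    super(message);
  }
}

interface RemoteService {
  // Returns the service response, or throws if the service is unavailable
  String call() throws RemoteServiceException;
}

/** A healthy service that always answers immediately. */
class QuickRemoteService implements RemoteService {
  @Override
  public String call() {
    return "Quick Service is working";
  }
}

/** A service that fails until delaySeconds have passed since serverStartTime. */
class DelayedRemoteService implements RemoteService {
  private final long serverStartTime; // nanoseconds, as returned by System.nanoTime()
  private final int delaySeconds;

  DelayedRemoteService(long serverStartTime, int delaySeconds) {
    this.serverStartTime = serverStartTime;
    this.delaySeconds = delaySeconds;
  }

  @Override
  public String call() throws RemoteServiceException {
    long elapsedNanos = System.nanoTime() - serverStartTime;
    if (elapsedNanos < delaySeconds * 1_000_000_000L) {
      // Still "starting up": simulate an unavailable service
      throw new RemoteServiceException("Delayed service is down");
    }
    return "Delayed service is working";
  }
}
```

Under these assumptions, the two early calls in `App` fail because fewer than 5 seconds have elapsed, while the call made after the 5 second sleep succeeds.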

The monitoring service:

```java
public class MonitoringService {

  private final CircuitBreaker delayedService;

  private final CircuitBreaker quickService;

  public MonitoringService(CircuitBreaker delayedService, CircuitBreaker quickService) {
    this.delayedService = delayedService;
    this.quickService = quickService;
  }

  // Assumption: Local service won't fail, no need to wrap it in a circuit breaker logic
  public String localResourceResponse() {
    return "Local Service is working";
  }

  /**
   * Fetch response from the delayed service (with some simulated startup time).
   *
   * @return response string
   */
  public String delayedServiceResponse() {
    try {
      return this.delayedService.attemptRequest();
    } catch (RemoteServiceException e) {
      return e.getMessage();
    }
  }

  /**
   * Fetches response from a healthy service without any failure.
   *
   * @return response string
   */
  public String quickServiceResponse() {
    try {
      return this.quickService.attemptRequest();
    } catch (RemoteServiceException e) {
      return e.getMessage();
    }
  }
}
```

As can be seen, the monitoring service fetches local resources directly, but wraps each call to a remote (costly) service in a circuit breaker object, which prevents faults as follows:

```java
public class DefaultCircuitBreaker implements CircuitBreaker {

  private final long timeout;
  private final long retryTimePeriod;
  private final RemoteService service;
  long lastFailureTime;
  private String lastFailureResponse;
  int failureCount;
  private final int failureThreshold;
  private State state;
  // The long literal keeps the multiplication from overflowing int arithmetic
  private final long futureTime = 1000L * 1000 * 1000 * 1000;

  /**
   * Constructor to create an instance of Circuit Breaker.
   *
   * @param serviceToCall    The remote service guarded by this circuit breaker
   * @param timeout          Timeout for the API request. Not necessary for this simple example
   * @param failureThreshold Number of failures we receive from the depended service before
   *                         changing state to 'OPEN'
   * @param retryTimePeriod  Time period (in nanoseconds) after which a new request is made to
   *                         the remote service for a status check.
   */
  DefaultCircuitBreaker(RemoteService serviceToCall, long timeout, int failureThreshold,
      long retryTimePeriod) {
    this.service = serviceToCall;
    // We start in a closed state hoping that everything is fine
    this.state = State.CLOSED;
    this.failureThreshold = failureThreshold;
    // Timeout for the API request.
    // Used to break the calls made to remote resource if it exceeds the limit
    this.timeout = timeout;
    this.retryTimePeriod = retryTimePeriod;
    // An absurd amount of time in future which basically indicates the last failure never happened
    this.lastFailureTime = System.nanoTime() + futureTime;
    this.failureCount = 0;
  }

  // Reset everything to defaults
  @Override
  public void recordSuccess() {
    this.failureCount = 0;
    this.lastFailureTime = System.nanoTime() + futureTime;
    this.state = State.CLOSED;
  }

  @Override
  public void recordFailure(String response) {
    failureCount = failureCount + 1;
    this.lastFailureTime = System.nanoTime();
    // Cache the failure response for returning on open state
    this.lastFailureResponse = response;
  }

  // Evaluate the current state based on failureThreshold, failureCount and lastFailureTime.
  protected void evaluateState() {
    if (failureCount >= failureThreshold) { // Then something is wrong with remote service
      if ((System.nanoTime() - lastFailureTime) > retryTimePeriod) {
        // We have waited long enough and should try checking if service is up
        state = State.HALF_OPEN;
      } else {
        // Service would still probably be down
        state = State.OPEN;
      }
    } else {
      // Everything is working fine
      state = State.CLOSED;
    }
  }

  @Override
  public String getState() {
    evaluateState();
    return state.name();
  }

  /**
   * Break the circuit beforehand if it is known that the service is down, or connect the circuit
   * manually if the service comes online earlier than expected.
   *
   * @param state State at which circuit is in
   */
  @Override
  public void setState(State state) {
    this.state = state;
    switch (state) {
      case OPEN -> {
        this.failureCount = failureThreshold;
        this.lastFailureTime = System.nanoTime();
      }
      case HALF_OPEN -> {
        this.failureCount = failureThreshold;
        this.lastFailureTime = System.nanoTime() - retryTimePeriod;
      }
      default -> this.failureCount = 0;
    }
  }

  /**
   * Executes service call.
   *
   * @return Value from the remote resource, stale response or a custom exception
   */
  @Override
  public String attemptRequest() throws RemoteServiceException {
    evaluateState();
    if (state == State.OPEN) {
      // Return cached response if the circuit is in OPEN state
      return this.lastFailureResponse;
    } else {
      // Make the API request if the circuit is not OPEN
      try {
        // In a real application, this would be run in a thread and the timeout
        // parameter of the circuit breaker would be utilized to know if service
        // is working. Here, we simulate that based on server response itself
        var response = service.call();
        // Yay!! the API responded fine. Let's reset everything.
        recordSuccess();
        return response;
      } catch (RemoteServiceException ex) {
        recordFailure(ex.getMessage());
        throw ex;
      }
    }
  }
}
```
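The comment in `attemptRequest()` notes that a real application would run the call in a thread and make use of the `timeout` parameter. One possible way to do that is sketched below with the standard `java.util.concurrent` API. `TimedCall` and `callWithTimeout` are hypothetical names introduced for illustration and are not part of the example above.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical helper: bounds how long a single remote call may take. */
final class TimedCall {

  private TimedCall() {
  }

  /**
   * Runs the call on a worker thread and waits at most timeoutMillis for its result.
   * Throws TimeoutException if the call does not finish in time.
   */
  static String callWithTimeout(Callable<String> call, long timeoutMillis) throws Exception {
    ExecutorService executor = Executors.newSingleThreadExecutor();
    Future<String> future = executor.submit(call);
    try {
      return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
    } catch (TimeoutException e) {
      // Give up on the slow call and interrupt the worker thread
      future.cancel(true);
      throw e;
    } catch (ExecutionException e) {
      // Unwrap the exception thrown by the call itself, if there is one
      Throwable cause = e.getCause();
      throw cause instanceof Exception ? (Exception) cause : e;
    } finally {
      executor.shutdownNow();
    }
  }
}
```

A circuit breaker could then treat a `TimeoutException` like any other failure and record it through `recordFailure`. Creating an executor per call keeps the sketch short; a long-running application would more likely share one thread pool across calls.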

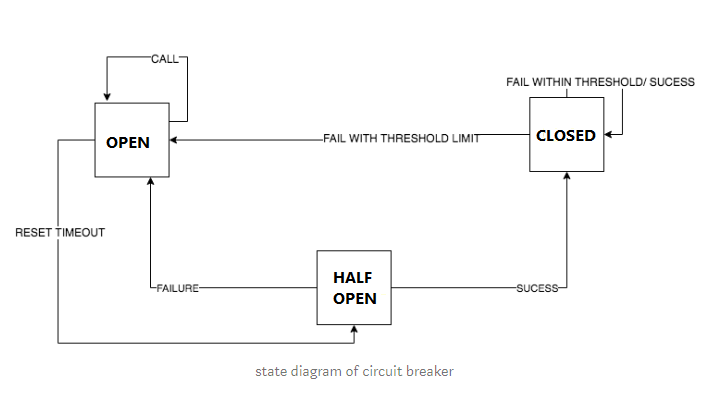

How does the above pattern prevent failures? Let’s understand via this finite state machine implemented by it.

- We initialize the Circuit Breaker object with certain parameters: `timeout`, `failureThreshold` and `retryTimePeriod`, which help determine how resilient the API is.
- Initially, we are in the `closed` state and no remote calls to the API have occurred.
- Every time the call succeeds, we reset the state to what it was in the beginning.
- If the number of failures crosses a certain threshold, we move to the `open` state, which acts just like an open circuit and prevents remote service calls from being made, thus saving resources. (Here, we return the response called `stale response from API`)
- Once we exceed the retry timeout period, we move to the `half-open` state and make another call to the remote service to check if the service is working, so that we can serve fresh content. A failure sets it back to the `open` state and another attempt is made after the retry timeout period, while a success sets it to the `closed` state so that everything starts working normally again.
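The transitions listed above can be traced with a stripped-down state machine. This is only a sketch: `BreakerStateSketch` is a hypothetical class that keeps just the counting and timing logic, and it takes an injected clock (an assumption made here so that the retry period can be simulated without sleeping).

```java
import java.util.function.LongSupplier;

/** Hypothetical, simplified breaker that only models the state transitions. */
class BreakerStateSketch {

  enum State { CLOSED, OPEN, HALF_OPEN }

  private final int failureThreshold;
  private final long retryTimePeriodNanos;
  private final LongSupplier clock; // supplies the current time in nanoseconds
  private int failureCount;
  private long lastFailureTime;

  BreakerStateSketch(int failureThreshold, long retryTimePeriodNanos, LongSupplier clock) {
    this.failureThreshold = failureThreshold;
    this.retryTimePeriodNanos = retryTimePeriodNanos;
    this.clock = clock;
  }

  // A failed call increments the counter and remembers when it happened
  void recordFailure() {
    failureCount++;
    lastFailureTime = clock.getAsLong();
  }

  // A successful call resets the breaker to its initial condition
  void recordSuccess() {
    failureCount = 0;
  }

  // Derives the state from the failure count and the time since the last failure
  State state() {
    if (failureCount < failureThreshold) {
      return State.CLOSED;
    }
    boolean retryDue = clock.getAsLong() - lastFailureTime > retryTimePeriodNanos;
    return retryDue ? State.HALF_OPEN : State.OPEN;
  }
}
```

With a threshold of 2 and a retry period of 2 seconds, two calls to `recordFailure()` move the sketch from `CLOSED` to `OPEN`. Once the injected clock has advanced by more than 2 seconds, `state()` reports `HALF_OPEN`. From there, `recordSuccess()` returns it to `CLOSED`, while another `recordFailure()` puts it back in `OPEN`.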

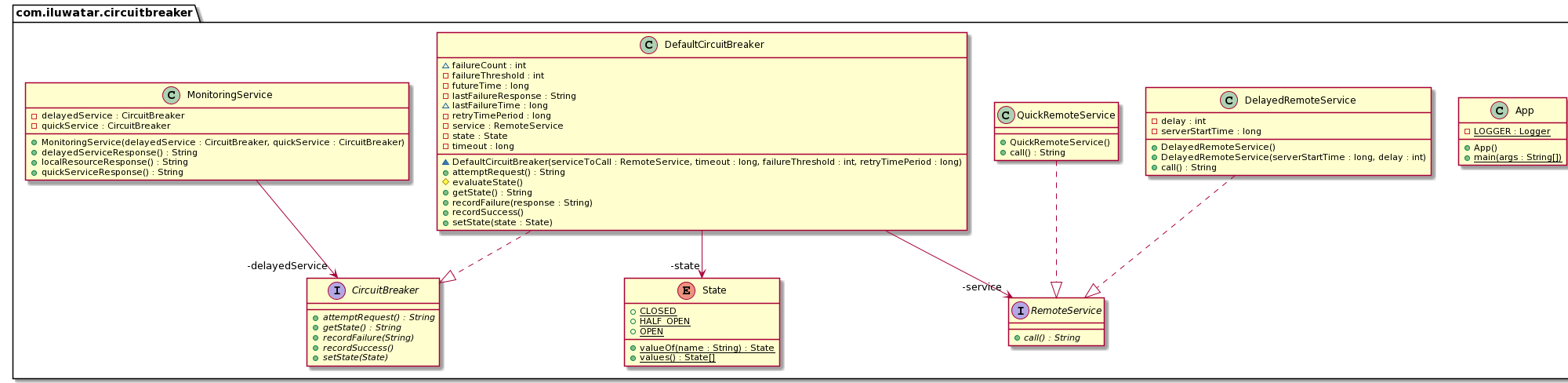

Class diagram

Applicability

Use the Circuit Breaker pattern when

- Building a fault-tolerant application where failure of some services shouldn’t bring the entire application down.

- Building a continuously running (always-on) application, so that its components can be upgraded without shutting it down entirely.